Speech perception can be defined as the process in which the sounds of language are heard, interpreted, and understood. Speech is typically viewed as the primary way of communicating, and it often needs to be heard in order to be understood. However, speech is not simply spoken; it is multisensory, and those who are missing a sense can still use language. Start with the basics: a conversation between two people. When you listen to the sounds or tone of voice a person uses, you can usually gather a lot about the conversation and about what the other person may be feeling, which helps you respond. Besides the auditory sense, you use your visual sense as well. During a conversation you often watch the other person for visual cues that go along with what they are saying. If a person is talking with a wide grin and moving their arms around frantically, you can quickly assume that they are excited or happy. Visual cues from body language play a huge role in holding a conversation and understanding social cues.

Tuesday, June 20, 2023

Speech Perception: It's not all Talk

Tasting What You Smell: Connection Between Taste and Smell

Have you ever wondered why a plate of food may taste bland when you have a cold or stuffy nose? Or why certain smells can instantly bring you back to a specific memory? The answers lie in the relationship between taste and smell. These two senses work together to create our perception of flavor. “Smell mostly affects taste not from inhaling odors into the nostrils, but from the odors that enter your nasal passages through the back of your mouth” (Rosenblum, 2010). This type of smell is called retronasal.

Taste and smell are distinct senses, but they are closely linked. Taste, which occurs on the tongue, allows us to detect basic sensations like sweet, sour, salty, bitter, and umami. On the other hand, smell, which takes place in the nose, enables us to identify and differentiate various odors.

When we eat or drink something, molecules from the food are released into the air in our mouth and travel up into our nasal cavity, carrying odors across the nasal passage. The olfactory receptors in our nose come into contact with these molecules and send signals to the brain, which then combines the information from taste and smell to create the perception of flavor.

Photo Link: https://www.brainfacts.org/thinking-sensing-and-behaving/taste/2012/taste-and-smell

References: See What I'm Saying, Rosenblum, 2010

The Chameleon Effect

We imitate people all the time unconsciously. Have you ever noticed yourself beginning to sound or use the same mannerisms as the person you spend the most time with? Whenever we interact with people, we slightly mimic parts of their speech, facial expressions, and behavior. Humans have this natural tendency that contributes to achievements in perception, coordination, and social interactions. When we take in subtle information about others, it allows us to imitate people without our awareness.

Not only can body posture and verbal communication be mimicked, but so can facial expressions. This is called covert facial mimicry: we mirror others' emotional expressions, for example by mimicking a smile or a worried look. Covert facial mimicry serves important functions, such as building social connections and empathizing with others' emotions. According to Rosenblum (2010), the chameleon effect is “our unconscious tendency to mimic the postures, mannerisms, and facial expressions of other people” (p. 388). From just a few weeks after birth, we start imitating facial expressions, showing an innate tendency to mimic others that stays with us throughout our lives.

Although most of the time imitating is unconscious, there have been times I have noticed myself copying others whether it's in my mannerisms or how I speak. I agree that this can build social connections because it shows that we can relate to and understand other people.

Monday, June 19, 2023

The Rubber Hand Illusion

Our brains work in miraculous ways, using our senses to determine many factors in our lives. The rubber hand illusion is a significant example of how our perception of touch works. In this demonstration, an individual is seated at a table with both palms face down in front of them. A wooden divider is then placed to the right of the left hand to hide it, a rubber hand is set in its place in view, and a cloth is placed over both the right arm and the rubber arm. This setup helps the individual focus specifically on the two hands in front of them. The experimenter then takes a brush and strokes the rubber hand and the hidden left hand synchronously. The brush strokes have to happen at exactly the same time for this to work; otherwise the brain can spot the difference through visual perception.

After a period of stroking, the individual's brain will see and feel the rubber hand as if it were actually their left hand. To prove this point, the experimenter does something startling to the fake hand, such as striking it with a hammer or violently bending one of its fingers. This causes a reaction from the individual because their brain has come to perceive the rubber hand as their own. While the illusion does not cause actual pain, it produces an immediate reaction when the fake hand is threatened. Our sense of touch in this case is affected by the synchronized brush strokes, showing that our visual perception is connected to what we feel.

Photo source: https://www.news18.com/news/buzz/when-brain-gets-hacked-doctor-hits-fake-hand-with-hammer-man-feels-pain-5530927.html

See What I'm Saying

The McGurk Effect

The McGurk effect is a phenomenon that scientists have studied over the years to determine how we perceive a sound that is being mouthed in front of us versus one being spoken behind us. In other words, if one person were to say the syllable "ba" behind us while another mouthed "ga" in front of us, our brains would perceive neither. Our brains typically find a middle ground between what we are seeing and hearing. In the instance above, our brains would combine the two, resulting in "da".

With this phenomenon of speech perception, our brains form a third sound from the sound we are physically hearing and the one we are seeing. Studies of the effect have shown that it is more than just multisensory perception: it is an automatic and unconscious response that happens when our visual and auditory senses merge. This leads to a deeper understanding of the type of facial information that is required for visual speech perception. The McGurk effect has also been shown to work across different genders, recognizable faces and voices, and different languages, making it a robust illusion.

sources: See What I'm Saying

https://www.frontiersin.org/articles/10.3389/fpsyg.2014.00725/full

Photo source: https://kids.frontiersin.org/articles/10.3389/frym.2022.705956

Proxy Touch

Sunday, June 18, 2023

Anosmia- More Than Just the Loss of Smell

Echolocation Experiment at Home

Echolocation is just what it sounds like: using your own voice to echo off objects in your environment. It is a method used not only by bats, but by humans as well. Blind people use this method to navigate their world. Blind individuals cannot see or, if they can, only slightly, and one can imagine the challenges that emerge in life without sight. The loss of sight allows other senses to come through and act as the "eyes" of the body. In this case, the auditory sense kicks in and sharpens, giving blind individuals a way to hear what they cannot see. This type of takeover is what we call cross-sensory perception. The textbook See What I'm Saying: The Extraordinary Powers of Our Five Senses describes it well: with sensory loss, the visual brain responds to sound and touch, while the auditory brain can respond to touch and sight (Rosenblum 2010, pg. 297). In other words, someone who loses their vision can use their hands to feel how things look and use sound to determine where things are.

If there is one thing to know about echolocation, it is that you don't have to be blind to use it. Anyone can use echolocation. I'll prove it. Take a plate from your pantry; this won't take any time at all. Pick the plate up and hold it at arm's length. Now blow and move the plate closer. Notice that as the plate approaches your face, the rush of air sounds louder. Then, if you push it away from your face, the sound fades and gets quieter. One last trick to really hear the difference is to blow while moving the plate back and forth quickly. Be careful not to hit your face, of course! You will notice again that when the plate is close to your face, the sound of the air is louder. At that speed, it will sound like a small siren. The difference is noticeable. More importantly, as the sound got louder, you knew to stop and move the plate away. This auditory approach lets you hear the future as well: your brain knows that the louder the sound, the closer the plate, so it is time to stop. In fact, your brain over-anticipates, giving you time to react and protect yourself. It allows your fight-or-flight response to kick in (Rosenblum, 2010, pg. 54). This gives blind people enough time to react and move out of harm's way, and helps them avoid bumping into things.

Everyone Smells Differently

I learned that everyone has their own unique scent, even twins, but people also perceive smells differently from one another. Your face wash can smell like candy to you, but to others it won't. The receptors in our nose determine how we smell odors. There are about 400 genes that code for the receptors in our nose, and many more variations of these genes. When the receptors of two people are compared, they usually differ by about 30 percent. When one person smells an odor, the receptors that are activated and the signal sent to the brain can be different from the next person's.

Duke Today Staff. (2013, December). No Two People Smell the Same. Duke Today. https://today.duke.edu/2013/12/hiroodor

Sreenivas, S. (2023, May). What is Parosmia?. WebMD. https://www.webmd.com/brain/what-is-parosmia

WebMD. (n.d.). Phantosmia: Is your nose playing tricks on you?. WebMD. https://www.webmd.com/brain/what-is-phantosmia

Multi-sensory Perception- How We Taste Things

When it comes to taste, we often assume that what we taste comes from the taste buds on our tongues. It is so much more than that. We can actually smell, touch, see, and even hear taste. This is what we call multi-sensory perception. Essentially, multi-sensory perception is when our brains use multiple senses together to perceive the environment around us. According to the textbook See What I'm Saying: The Extraordinary Powers of Our Five Senses, all of your senses have direct influences on taste, and they also have indirect influences that work together and affect each other (Rosenblum, 2010, pg. 134). Together, they enhance the experience of taste.

An example of how multi-sensory perception works on taste can be seen by simply eating a salad. Imagine you have a bowl of Caesar salad in front of you. Before you even take a bite, you smell the dressing and the mixed ingredients. This on its own already enhances the way the entire dish will taste. Essentially, the odor of the salad creates an expectation in your head, as you can already imagine what the meal will taste like. Your brain uses memory as well to distinguish the flavors. Now, when you take a bite, the crunching sound of the salad and croutons creates a pleasurable flavor as it works together with texture. This coincides with touch, as people have preferences for the feel of what they eat. After all, a soggy salad would leave a displeasing taste in one's mouth. Speaking of pleasing, the sight of the salad itself also makes the food taste better, on top of the taste it provides. The appearance of food influences how appetizing one perceives a meal to be (Rosenblum, 2010, pg. 123). Color and memory play a huge role in this. If you don't see the food you are used to eating, you won't find it as appealing or interesting as you normally would. Or, if the meal, in this case the salad, has dull colors, you might see it as flavorless. On top of all these senses, the obvious one that works with them is taste. As we start to chew, all the flavors in the bowl combine to create a salty palate from the cheese, dressing, and lettuce (and chicken, if you were to add it).

Overall, it isn't just taste that uses multi-sensory perception; all the senses use it. The example above is just a depiction of how taste is enhanced and distinguished through more than just our tongues. We don't even realize the small inputs we take in every day. Think of it this way: we know by memory what water looks like. If someone gave you a glass and said it was water, but it had a yellow tint to it, you wouldn't find it appealing at all. By using your eyes, you were able to "taste" how unappealing it is. If it had a weird odor, you could smell how unappetizing it would taste as well. So, take notice the next time you eat something. Make a note in your brain of all the senses you never really noticed before. Notice how they all work together to make the perfect flavor.

Friday, June 16, 2023

Mirror Neurons- Your Brain Is Imitating all the Time

Before neurophysiologist Giacomo Rizzolatti and his colleagues discovered a class of cells called "mirror neurons", scientists were under the impression that the brain had a set way to control and coordinate the body. It was believed that set parts of the brain were responsible for action planning, meaning that if you want to control or move your body, your brain has the premotor and parietal cortices to help you do so. In the mid-90s, Rizzolatti and his colleagues were studying how cells in the motor area of a monkey's brain were associated with planning hand and mouth movements. They were monitoring the cells while the monkey was grabbing raisins and eating them. Once the monkey took a break from eating the raisins, an experimenter reached for the raisins. Although the monkey did not reach for the raisins, it watched the experimenter do so, and parts of the motor brain (motor cells) reacted as if the monkey were the one reaching for the raisins. Basically, "monkey see, monkey brain do".

The researchers later found that certain motor cells responded to certain types of stimulation. For example, seeing an action that involved a hand movement triggered the cells corresponding to hand movements. The same was true of mouth movements. They classified these cells as "mirror neurons". With time, scientists found that there are different subtypes of mirror neurons, some of which respond to very specific details of actions. For example, one subtype may respond to the specific movement of someone grasping an object with their thumb and index finger. Another subtype may respond to the broad movement of using any part of the hand to grab the object. Many of these mirror neurons respond to multisensory information; for example, the cells that interpret hand tapping actions are responsive to both the sight and sound of the tapping. Even if the actual target that is being grabbed is visually obstructed, the mirror neurons can basically assume that the subject is going to grab something. This has some scientists thinking that these mirror neurons don't only register a recognized action; they also register the subject's (animal or human) intention, although this is a controversial idea.

Thursday, June 15, 2023

Attractiveness and Reproduction

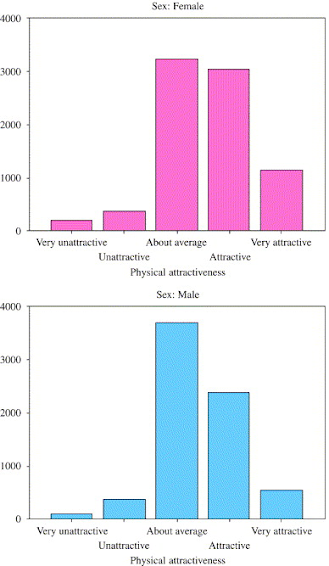

An individual's physical attraction to another person plays an active role in reproduction. In 2010, Markus Jokela published a study titled "Physical attractiveness and reproductive success in humans: Evidence from the late 20th century United States". Jokela found that very attractive women had 6% more children than their less attractive counterparts. In comparison, men in the lowest attractiveness group had 13% fewer children than other men. These associations were partly, but not fully, accounted for by attractive people's increased probability of marrying.

Because physical attractiveness plays a part in seeking partners, people evolved to seek individuals they are physically attracted to. Physical attractiveness has rarely been examined directly in relation to fertility, but attractive people are expected to have higher chances of reproductive success. In a 2007 study, Kanazawa argued that beautiful parents have more daughters than sons because the benefit of attractiveness differs by sex: a female offspring is expected to benefit more from inheriting "attractiveness" than a male offspring.

Jokela believes that people searching for a partner to have children with are less likely to pick a beautiful partner because beautiful mates are more likely to leave them for another partner.

Predicting adult sociodemographic and fertility outcomes by adolescent attractiveness. Columns show the predicted outcomes.

| Attractiveness | Years of marriage | Education | Interbirth interval | Male offspring (%) |
|---|---|---|---|---|
| **Women (n=1244)** | | | | |
| Not attractive | 16.7 (16.1–17.3) | 13.2 (13.0–13.4) | 2.81 (2.57–3.06) | 52.9 (49.4–56.3) |
| Moderately attractive | 17.2 (16.2–17.8) | 13.2 (13.0–13.4) | 2.92 (2.67–3.19) | 50.1 (46.5–53.6) |
| Attractive | 18.0 (17.4–18.6) | 13.3 (13.1–13.6) | 2.82 (2.58–3.07) | 50.5 (47.2–53.8) |
| Very attractive | 18.0 (17.4–18.7) | 13.4 (13.3–13.6) | 3.09 (2.83–3.36) | 53.1 (49.7–56.5) |
| p for linear trend | 0.001 | 0.05 | 0.05 | 0.89 |
| **Men (n=997)** | | | | |
| Not attractive | 23.8 (22.9–24.7) | 14.2 (14.0–14.5) | 3.44 (3.06–3.84) | 53.6 (50.3–57.0) |
| Moderately attractive | 24.5 (23.6–25.4) | 13.9 (13.6–14.1) | 3.30 (2.94–3.68) | 52.0 (50.0–54.2) |
| Attractive | 25.2 (24.3–26.1) | 14.1 (13.8–14.4) | 3.20 (2.85–3.58) | 50.4 (48.2–52.5) |
| Very attractive | 24.9 (24.0–25.8) | 13.8 (13.5–14.1) | 3.26 (3.00–3.75) | 48.7 (45.5–51.9) |
| p for linear trend | 0.05 | 0.12 | 0.56 | 0.07 |

Sites:

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3000557/

https://www.sciencedirect.com/science/article/pii/S0022519306003079?casa_token=sxVIupjBIlAAAAAA:5Dksfy_pun8P-11hw3z3Jq5XuLyKOytMFh42Lf7b3IGxv3uzIO0KLgnVezxuGGFcaFJj5_ezBM0

You Read Lips- The McGurk effect

From birth onward, humans have an automatic tendency to read lips. It is part of the way we interpret communication subconsciously. When we are in a loud room trying to talk to someone, we lip read. If we are talking to someone whose accent makes them a little harder to understand, we lip read. Even if we are not in a loud room or talking to someone with an accent, we still lip read. It's a part of human nature: from the first language you learn until the day you die, you will lip read subconsciously (or consciously). Whenever you are able to see the person who is communicating with you, you use their lips (and facial information and expressions) to enhance the words coming out of their mouth.

The McGurk effect is a phenomenon introduced by cognitive psychologist Harry McGurk, and it demonstrates how much the brain relies on visual speech information when communicating through auditory speech. The McGurk effect is an illusion where you hear one sound and are shown a second sound, but what you're hearing is overridden by what you are seeing. Here is how to test this phenomenon: get two friends and have one stand in front of you and one behind you. Have the friend standing behind you repeat "ba, ba, ba..." and at the same time have the friend in front of you mouth "va, va, va". At first you do hear your friend saying the "ba" syllable behind you, but as you focus on the friend in front of you, that "ba" sound turns into a "va" sound. In the laboratory of Lawrence Rosenblum, they found that the visible "va" overrides the auditory "ba" 98% of the time. That's important to consider because it shows that lip reading is an important part of auditory communication.

The McGurk effect can occur in many different ways. Another example is hearing one sound, being shown a second sound, but perceiving a third sound that neither friend produced. For instance, you have two friends as in the first experiment, one behind you and one in front, and they produce two different syllables: "ba" is spoken behind you and "ga" is mouthed in front. While you are focusing on your friend in front, you perceive the "ba" as a third sound, "da". In this case, your brain is compromising between what you're seeing and hearing, and here is why. If you articulate those three syllables, "ba", "ga", and "da", slowly, you will realize that they are all related in how you use your mouth to articulate them.

If you want to test this phenomenon and you are alone, there are videos on YouTube of people performing the McGurk effect. They have audio playing in the background while they mouth a different syllable or word.

Wednesday, June 14, 2023

Sommelier

A sommelier is a knowledgeable wine professional who specializes in all areas of wine service. They often work in fine dining restaurants, hotels, wineries, or wine shops, assisting customers in selecting and enjoying wines that complement their meals or personal preferences. When offering pairing recommendations, they examine factors such as acidity, sweetness, tannins, and the overall balance of a wine. This ability to create harmonious pairings improves the eating experience by allowing customers to fully appreciate the flavors of both the cuisine and the wine.

That being said, sommeliers need a heightened sense of taste for all of this to be possible. Although some people are born sensitive to different tastes, a lot of sommeliers undergo sensory training programs that focus on enhancing their ability to identify and describe the various components of a wine, such as acidity, sweetness, tannins, and aromas. These programs include exercises like blind tastings, where sommeliers taste wines without knowing their identities to sharpen their ability to detect subtle differences. From there, sommeliers learn about the interplay of flavors and how they interact with various types of cuisine by tasting wines. They can identify which wines will complement or enhance specific cuisines by examining the flavor profiles of wines. They can use this talent to create balanced and memorable meal and wine pairings that enhance the dining experience.

ECHOLOCATION

Some animals, including some humans, have a unique ability called echolocation. It resembles having a built-in sonar system similar to the one used by submarines. A vocalization, such as a click or a shout, sends sound waves through the water or the air. The sound then returns as an echo when it strikes an object. By carefully listening to these echoes, the individual or animal can determine the location of objects, their distance, and occasionally even what they appear to be. It's similar to using sound waves to virtually "see" the environment even when they cannot see with their eyes. This ability lets them navigate, obtain food, and avoid obstacles.

In everyday life, echolocation can help people with visual impairments become more independent and mobile. They can confidently move through crowded areas, find specific objects in their environment, and navigate busy streets by intentionally listening to the echoes rebounding off nearby objects. Echolocation lets them navigate the world comfortably, which also improves their general quality of life and makes it possible for them to engage in a wider variety of activities.

In the book See What I'm Saying: The Extraordinary Powers of Our Five Senses, a cool example of this was when the author spoke about how blind people use echolocation while biking on different terrains. His example showed how those trained to echolocate were able to pick up on even the smallest rock that might get in their way. Biking is an activity that must initially feel impossible to those who are visually impaired; however, learning to echolocate gives them a new sense of independence.

ANOSMIA

The term "anosmia" describes a partial or total loss of scent perception. It can affect people of all ages and be either temporary or permanent. Nasal congestion, head trauma, certain drugs, neurological conditions, and viral infections like the common cold or influenza are just a few of the causes of anosmia. In recent years, anosmia has received more attention because it has been linked to COVID-19.

This specifically caught my attention because my mother lost her sense of smell after having COVID. She struggled with smell and has also explained to me how it has shifted her taste more than she expected. It's been 2 years and she still has not been able to smell. After reading the book about the condition and looking into it on my own, I am realizing that she most likely now suffers from anosmia.

The absence of smell, or anosmia, can significantly affect daily life. Several parts of our daily activities are shaped by our sense of smell, which is essential to our total sensory experience. For instance, losing the capacity to smell might significantly alter how we perceive flavor. Because the complex sensations we ordinarily experience are entwined with our sense of smell, food may start to taste less good and appear less attractive. This may result in a decrease in appetite and changes in eating habits. Anosmia can also compromise safety, since smell is crucial for detecting things like gas leaks, rotting food, and smoke. Something as simple as smell can impact someone's life hugely, and that is something those with anosmia have to experience every day.

You Can Taste Scenes- Tongue Display Unit (TDU)

Picture A shows the Tongue Display Unit (TDU)

The tongue display unit is a version of a tactile aid device that conveys visual information to visually impaired individuals through the tongue. Tactile aid devices developed after the invention of the video camera, and the original was developed in the 1960s. It included a video camera that converted its images through an array of 400 small electrodes that were placed on the back of a user. The posts would vibrate depending on what was being shown on the screen, with a specific pattern of vibration for specific images. The subject would then guess what was being shown through the camera based on the vibrations on their back. At first, the subjects were not able to decipher what the vibrations meant; they were just vibrations. After as little as a few hours, the subjects were able to analyze the patterns and create a mental image of what was being "shown" to them. The device does not only work when the electrodes are on the subject's back; it can be moved around the exterior of the body without affecting the subject's interpretation of the image. This goes to show that the brain treats the skin as a sense organ that can distinguish patterns of touch.
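To make the camera-to-skin conversion above more concrete, here is a minimal sketch of one way an image could be reduced to 400 intensities, one per stimulator. It is only an illustration under stated assumptions: the 20 x 20 layout, the block-averaging step, and the function name `to_stimulator_grid` are all hypothetical and are not taken from the original device.

```python
def to_stimulator_grid(image, grid_size=20):
    """Block-average a grayscale image into grid_size x grid_size cells.

    `image` is a list of rows, each a list of brightness values (0-255).
    The 20 x 20 default (400 cells) is an assumed layout for illustration.
    """
    height, width = len(image), len(image[0])
    if height < grid_size or width < grid_size:
        raise ValueError("image must be at least grid_size pixels in each dimension")

    grid = []
    for gy in range(grid_size):
        # Pixel rows covered by this grid row
        y0 = gy * height // grid_size
        y1 = (gy + 1) * height // grid_size
        row = []
        for gx in range(grid_size):
            # Pixel columns covered by this grid column
            x0 = gx * width // grid_size
            x1 = (gx + 1) * width // grid_size
            block = [image[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            # Average brightness of the block stands in for vibration strength
            row.append(sum(block) / len(block))
        grid.append(row)
    return grid


# Example: a 40 x 40 image that is dark on the left and bright on the right
image = [[0] * 20 + [255] * 20 for _ in range(40)]
grid = to_stimulator_grid(image)
```

In this sketch, the left half of the grid would stay still while the right half vibrates strongly, which is the kind of pattern a subject would learn to read as an edge.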

Picture B shows the original version of the visual tactile aid device created by neuroscientist Dr. Paul Bach-y-Rita

Tuesday, June 13, 2023

The Perception of Beauty and Attraction

Ever wonder what your type is? How do facial symmetry and the perception of beauty affect attraction? It starts when we are very young: studies have shown that babies prefer attractive and symmetrical faces over any other. Symmetrical faces are more easily perceived and recognized, so if a baby is staring at your face, they are studying the patterns within its symmetry as well as forming some sort of recognition. Our brains naturally look for patterns, which helps us make decisions as well as seek out more attractive faces. This is why our brains favor more symmetrical faces: more patterns can be found within the features. Scientists have manipulated the symmetry of individual faces to study how attractive they appear and how they compare with naturally symmetrical faces. The results were a gradient: faces with decreased symmetry were rated less attractive, faces with increased symmetry (mirror images of a face) were rated attractive, and naturally symmetrical faces were rated the most attractive.

Monday, June 12, 2023

Lip Reading

Lip reading is an extremely useful tool for people who are hearing impaired, and it makes conversations much easier for them. Deaf people will watch the body, hands, and mouth of the person they are listening to in order to understand what they are saying and which tone and volume they are using. The struggle with this is that many words look similar on the lips, which can cause confusion. Most rhyming words can trip up someone who is lip reading, but with proper context clues the lip reader can more easily make out which words the speaker is saying. There are other factors that can throw off a lip reader, such as people who talk very fast or have a lot of facial hair.

One of my best friends is hearing impaired, and if his hearing aids die he tends to read our lips when we are hanging out. He doesn't use this every day, so he is not great at it, but he can make out most of the words with context clues and focus. Watching him do this shows how impressive a skill it is; it's like learning a whole other language. You can only really learn this skill if you are hard of hearing, because if you can hear you can unintentionally cheat while trying to learn.

Echolocation

Echolocation is the process in which an animal makes a sound and, based on how that sound reflects off objects in the vicinity, learns what is around it and how far away it is. Animals use this process to hunt prey when they cannot see. Bats are the best-known example: they hunt at night using echolocation. In my opinion they use it in the most impressive way, since they not only get an understanding of every object in their way but can also locate whichever animal they are hunting. Different animals have different uses for echolocation, but its main use is for hunting prey.

Echolocation can be learned by humans, and blind people often learn this skill, but it is not as effective as it is for certain animals. We have a specific part of our brain that processes echoes, which makes this possible. Unlike animals, humans need the circumstances to be right: for blind people to use echolocation effectively, their surroundings need to be quiet, and it also helps if what they are trying to locate is not moving. Submarines use a form of echolocation (sonar) when moving underwater in the dark, sending out repetitive beeps to gauge how far ahead an object is.
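The distance judgment described above comes down to simple arithmetic: the sound travels to the object and back, so the distance is the speed of sound multiplied by the echo delay, divided by two. The short sketch below works through that calculation. The speeds are assumed round values (they vary with temperature and, in water, with salinity), and the function name `echo_distance` is made up for this example.

```python
# Approximate speeds of sound in metres per second (assumed round values)
SPEED_OF_SOUND_M_PER_S = {"air": 343.0, "seawater": 1500.0}


def echo_distance(delay_s, medium="air"):
    """Return the distance in metres to an object, given the round-trip echo delay in seconds."""
    if delay_s < 0:
        raise ValueError("delay must be non-negative")
    # The sound covers the distance twice (out and back), so halve the total path
    return SPEED_OF_SOUND_M_PER_S[medium] * delay_s / 2.0


# A click that echoes back after 20 milliseconds in air
wall_distance = echo_distance(0.02)

# A sonar beep that returns after 2 seconds underwater
hull_distance = echo_distance(2.0, medium="seawater")
```

Under these assumptions, the wall is a little under three and a half metres away and the underwater object is about 1,500 metres ahead. It also hints at why quiet surroundings matter: in air, an object one metre away returns its echo in only a few thousandths of a second.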

Synesthesia

Synesthesia is a very uncommon and complex neurological condition. It is the process of taking in information through one of your senses and having it trigger a different sense. For example, someone may hear the name Carl and think of the color yellow. The association may have meaning, but it can also arise for no reason. The most common version of synesthesia is grapheme-color synesthesia. This means that letters or numbers trigger a color in the person's mind. One way I can relate this to myself is that whenever I need a folder for math class I prefer it to be red because I associate that color with that class, but I do not have this condition.

While learning about this topic during this class, it blew my mind that this is real. I had never heard of synesthesia, and while it does make sense to me that this happens, it really is mind blowing. The biggest issue I could see someone with synesthesia going through would be worrying that they are misunderstood and that their true self may come off as weird compared to other people, when in reality it is pretty cool that people think differently in this way. I could not find information on whether or not it is genetic, but there is no cure. This is not that big of a deal considering it doesn't affect their daily life too much.

Faces

Facial perception is an individual's understanding and interpretation of the face. Just from looking at someone's face you can recognize their identity, gender, emotional state, intentions, genetic health, etc. Subliminal faces can influence mood, behavior, and facial expressions. I am always told by someone to "fix my face" simply because I cannot hide my emotions.

Facial recognition: This means you are able to compare faces and identify which face belongs to which person. Facial recognition can identify human faces in images or videos, and determine if the faces in two images belong to the same person.

Emotion detection: This means you are able to classify the emotion on the face as happy, surprised, fearful, sad, angry, irritated, etc. Beyond the face, a common way to detect someone else's emotions is by analyzing the characteristics of their voice signal.

Facial detection is important today because it helps with facial analysis. I believe this has a huge impact on society because not only does it help determine gender, age, or feelings, but it is also used in law enforcement, mobile technology, and banking and finance. Facial recognition is important because it does its own work by verifying a person, which provides accurate information and security. Emotion detection is important because it lets you recognize someone's emotions through their facial expressions. You can then use the information from their expressions to reveal their emotional state.